This is the first post in a series about bias in data and technology, particularly as relevant to Machine Learning and related technologies (AI, LLMs, etc.).

A friend recently sent me a paper about the different challenges that covert and overt bias present for Machine Learning and Artificial Intelligence (ML/AI) models. This is a distinction I’ve come across in other papers as well, and one that motivates my own research. My research has focused on text data and language technology (the field of Natural Language Processing, or NLP). In one of my papers, I define biased language as written or spoken language that harms people by referring to them in a simplified, dehumanizing, or judgmental manner. I and many other authors writing about biased data and technology have proposed different ways to think about types of bias. One way is differentiating between covert vs. overt bias. The general idea is:

Overt bias is explicit, something that’s easy to pick up on. Think hate speech, derogatory language, and insults that reflect social stereotypes. Covert bias is also stereotypically derogatory and insulting, but in a less obvious way. This type of bias isn’t always something that can be picked up on by looking at one sentence in a dataset or one decision a model makes. Often comparisons need to be made about how a model describes or makes decisions about many different people.

In that paper I read recently, the authors’ examples of bias include calling certain people “lazy” and “aggressive.” They found that the Large Language Models (LLMs)* they studied were more likely to describe a person in this negative and biased manner if the person used what’s termed “African American English” (AAE) than if they used what’s termed “Standard American English” (SAE).** As the name implies, AAE is more associated with Black communities in the US than with other races. The authors state that because the LLMs weren’t given the race of the person who used AAE and SAE, the LLM’s association of AAE with qualities of laziness and aggressiveness is an example of covert bias. I would argue that using a term such as “aggressive” gives an explicitly negative judgment, so this example of bias is actually overt, but I get the authors’ point: bias can be present whether or not an identity characteristic such as race is explicitly stated.

The authors give another example that fits more with my idea of covert bias: they looked at how LLMs judged a person’s likelihood to commit a crime based on an example of text recording something a person said. The authors found that the models they studied judged text in AAE as coming from people more likely to commit a crime than text written in SAE. Here, the LLMs are not told the race of a person, and the biased behavior of the LLMs is determined by comparing LLM predictions on text written in AAE versus on text written in SAE.

This distinction between overt and covert bias is important because overt bias is easier to measure. Current approaches to measuring biases in ML/AI models focus on overt bias, so approaches to minimizing bias miss how models exhibit covert bias. What does this mean in a real world context?

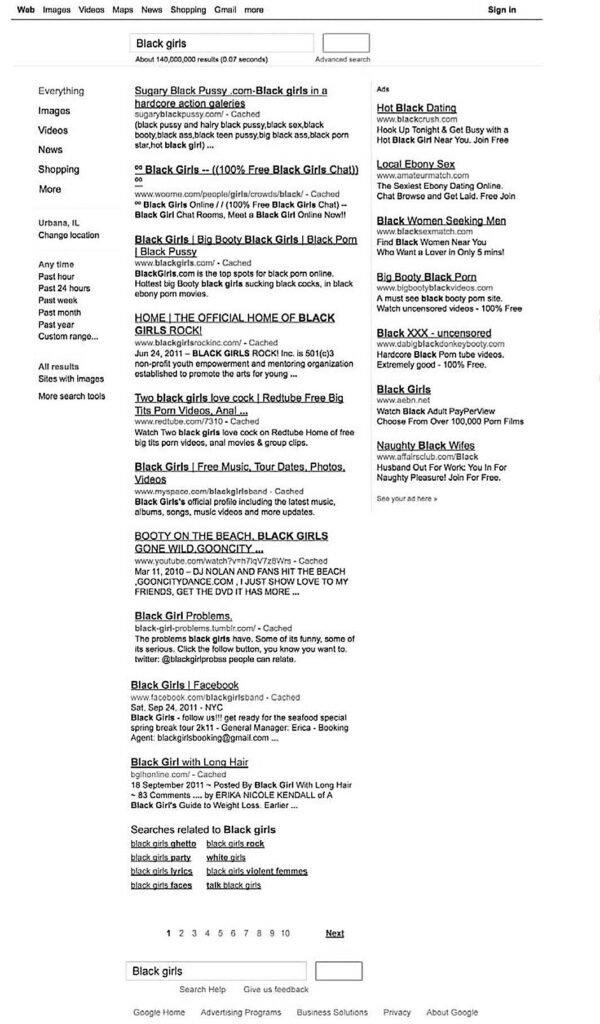

Consider the bias of the over-sexualization of women, particularly Black women and girls. This bias has historical roots that can be traced back to enslavement and colonialism, and continues to impact Black women’s experiences in present-day society. Safiya Noble demonstrated how current technologies perpetuate biases that harm Black women and girls in her 2018 book, Algorithms of Oppression. Noble wrote of how, when she searched “black girls” on Google to help her think of gift ideas for her nieces, Google’s search results presented her with links to pornographic websites. If you search “black girls” on Google today, links to those pornographic websites will not be listed in the search results. At a glance, it seems like Google fixed their search engine’s gender-biased and racially-biased behavior. However, when a friend of mine searched “video call apps” on Google just last year, the top search results linked to websites that guaranteed things like a call with a “hot girl.” (This friend opts out of Google’s search personalization, by the way, so Google could only personalize the search results based on my friend’s geographic location in the UK.)

Going back to the idea of overt vs. covert bias, the search results Noble received demonstrate an ML/AI technology exhibiting overt bias, because gender and race were specified in the search query, and the search results were biased against the specified gender and race. The search results my friend received demonstrate an ML/AI technology exhibiting covert bias, because gender was not specified in the search query, yet the search results were biased against women and girls.

In the tech world, social biases such as gender bias and racial bias are too often approached as problems with technological solutions. Technology does not exist in isolation from people, though. People decide what data to collect. People decide what questions ML/AI models should answer. People decide how ML/AI models should interpret data. People decide how to evaluate ML/AI models’ ability to answer certain questions and interpret certain data. All data-driven technology, including ML/AI models, are socio-technical systems, so any social biases they exhibit require a mix of socially grounded and technologically grounded responses.

I use the word “responses” rather than “solutions” because social biases will always exist. Every person has a unique perspective, based on their education, training, and lived experiences, that shapes how they interpret information and move through society. Social biases are not problems that can be fixed. Social biases are and will be a perpetual challenge. That’s not to say we can’t do anything to prevent their harms, though. What I urge is shifting the focus from mitigating bias to mitigating harms from bias. Rather than trying to create neutral, objective, universal, or generalizable technologies, we should create technologies that incorporate as many different perspectives as possible.

I’m interested in how ML/AI models can help people understand what perspectives have been included and excluded in a dataset or technology system. I’m interested in how these models can help people figure out whether certain people are misrepresented in a dataset or technology system. Once we know which people are excluded or misrepresented, we can take action to include or more accurately represent those people. That’s why I use ML/AI models to communicate bias, rather than trying to eliminate bias from ML/AI models.

*Briefly, Large Language Models (LLMs) are ML/AI models created with text data.

**There is an obvious value judgment being made with the word “Standard” in Standard American English (SAE), but the implications of that deserve a deeper discussion that I’ll dive into in a future post.

Leave a Reply